Immersive Design Studios on Color Grading for Live Event Productions

March 21, 2024

Virtual production is taking off rapidly in Hollywood, and enthusiasm for the format is spilling over into the professional audiovisual (AV) space. More AV teams are turning to the approach to deliver blockbuster-caliber hybrid event experiences for everything from live concerts to product launches, esports competitions, and beyond.

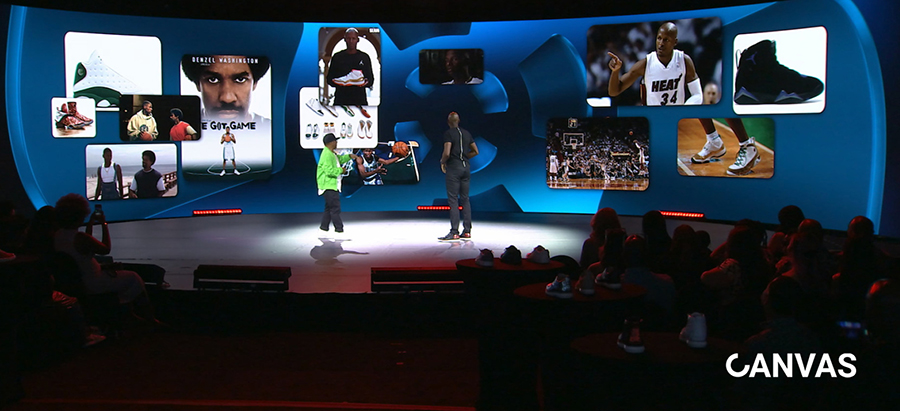

While the technology behind both is similar, the approaches vary. In on-set environments, cameras can shoot, pause, and reset at will, and issues can be fixed in post, whereas with live, there are no second chances. Immersive Design Studios has carved a niche for itself with its CANVAS platform, which leverages game engine and LED wall technology similar to that used in virtual production to support live event productions. The company recently prioritized achieving a cohesive look for broadcasts of these events, which is why it started using AJA ColorBox with Assimilate Live Looks for live grading. We chatted with Immersive Design Studios Co-Founder and CEO Thomas Soetens to learn more.

How would you describe Immersive Design Studios?

We’re a software development and design company best known for our hybrid cloud and on-premises production platform, CANVAS. It combines real-time game engine and cloud technology with video capture, playback tools, and AI neural networks to ensure immersive audience experiences. We developed it with performance resources distributed in such a way that clients can leverage the platform without any game engine or media server experience.

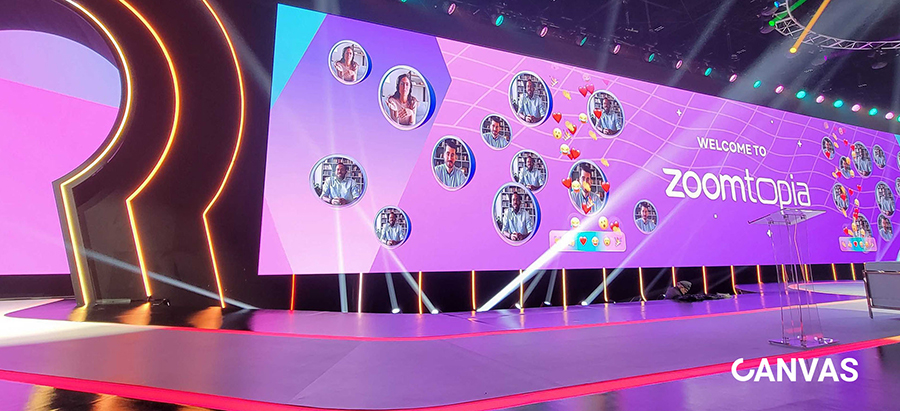

CANVAS harnesses a similar logic to Unreal Engine virtual production workflows but is much more flexible and is designed for live environments. We’ve designed our platform to facilitate a continuous production workflow; if we need to broadcast an event, we can do it for eight hours or more for multiple consecutive days with CANVAS. Sometimes, we even stream out to hundreds of thousands of viewers online. It’s all bidirectionally interactive, so the audience can speak to the host in the studio and vice versa.

Who are your main clients, and what do client interactions look like?

We work with a range of clients who deploy our CANVAS Server, CANVAS iPad app, CANVAS Studio app, CANVAS multi-viewer, and CANVAS streaming technology, and each interface is unique to the client. When we onboard new clients to the CANVAS Platform, they build and customize the interface to their liking based on the experience they want to execute; a client with a boardroom solution is likely going to have different needs and preferences than one doing projection mapping on an ice rink. WORRE Studios, one of our clients, has done several exciting productions for major brands and designers. They recently produced an event that required a live broadcast leveraging different camera brands, and we helped them create a unified look with AJA ColorBox.

Why did you decide to use AJA ColorBox for the project?

We’re constantly brainstorming ideas and testing them. When we started thinking about this project and began working on LUTs, we realized we could use our cinema cameras with AJA ColorBox and Assimilate Live Looks to push the loads. We knew Assimilate would allow us to easily handle and touch up LUTs in real time on different cameras. Once we were in agreement, we ordered multiple ColorBoxes, downloaded the software, and were using them in under a week.

Tell us more about the project and workflow.

WORRE Studios features a circular LED stage comprising four massive LED walls, each 60 feet wide and 14.5 feet high, with a combined resolution of 38K. The space accommodates an in-person audience of up to 350 people, who are immersed in the event, and has the capacity to reach up to 500,000 virtual attendees over Zoom. This CANVAS-powered facility has been used by many Fortune 500 companies, celebrities, and more for live hybrid in-person and virtual events. While CANVAS outputs the experiences onto the LED walls, the studio also often produces and distributes a live stream of everything happening in-studio, as was the case for this project.

The workflow is relatively straightforward. We output feeds from ten different cameras through a video mixer and into our AJA ColorBox units. The color converters helped us unify the look and feel of the color to a prespecified LUT the team wanted applied. They simply sat between every camera and the switcher, introducing essentially zero latency, which made them a very handy piece of kit. All we had to do was configure the devices and talk to them over the network, and we got incredibly powerful outcomes. The devices are also small and portable, which was great because we could place them anywhere and access them remotely via the browser-based web UI.

On this project, it was important that anywhere we had an interaction between the LED wall, speaker, and virtual production background, the look was uniform to ensure a Hollywood-caliber viewing experience. This is where lookup tables (LUTs) came in, supporting camera calibration that went beyond adjusting the white point and evenly balancing the colors. We wanted to create something that would make the remote audience feel as though they were inside a movie.
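For readers less familiar with LUTs, the sketch below illustrates in general terms what a 3D LUT does: it stores output colors on a coarse RGB grid and interpolates between grid points for every incoming pixel. It is a minimal illustration only, not a description of how AJA ColorBox or Assimilate Live Looks work internally; the function names `build_lut` and `apply_lut`, the grid size, and the "warm" look are all assumptions made for the example.

```python
from typing import Callable, List, Tuple

RGB = Tuple[float, float, float]
Lut3D = List[List[List[RGB]]]


def build_lut(size: int, transform: Callable[[RGB], RGB]) -> Lut3D:
    """Sample `transform` on a size x size x size grid (size must be >= 2).

    lut[r][g][b] holds the output color for the grid point (r, g, b).
    """
    step = 1.0 / (size - 1)
    return [[[transform((r * step, g * step, b * step))
              for b in range(size)]
             for g in range(size)]
            for r in range(size)]


def apply_lut(lut: Lut3D, rgb: RGB) -> RGB:
    """Look up one color (components in 0..1) using trilinear interpolation."""
    size = len(lut)
    base, frac = [], []
    for component in rgb:
        # Clamp out-of-range input, then locate the enclosing grid cell.
        pos = min(max(component, 0.0), 1.0) * (size - 1)
        lo = min(int(pos), size - 2)
        base.append(lo)
        frac.append(pos - lo)
    r0, g0, b0 = base
    fr, fg, fb = frac

    out = [0.0, 0.0, 0.0]
    # Blend the eight corners of the cell, weighted by proximity.
    for dr in (0, 1):
        for dg in (0, 1):
            for db in (0, 1):
                weight = ((fr if dr else 1.0 - fr)
                          * (fg if dg else 1.0 - fg)
                          * (fb if db else 1.0 - fb))
                corner = lut[r0 + dr][g0 + dg][b0 + db]
                for channel in range(3):
                    out[channel] += weight * corner[channel]
    return (out[0], out[1], out[2])


# Hypothetical "warm" look: pull the blue channel down by 10 percent.
warm_lut = build_lut(17, lambda c: (c[0], c[1], 0.9 * c[2]))
print(apply_lut(warm_lut, (0.2, 0.5, 0.8)))
```

Because the table can encode any mapping from input color to output color, a LUT can carry a full creative look rather than just a white-point or channel-balance correction.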

Just as color grading is part of post-processing for a movie, we used color grading in a live fashion to deliver the experience. This approach gave us far greater control. We unified the cameras, and the entire look and feel of the show was customized based on our color grading decisions for this event and set of cameras.

What challenges did you face?

Any experience we build or support must look uniform when shared with an audience. That can be challenging on a project like this, where you’re working with different camera types, each with its own look. We knew learning multiple camera models and brands, including their different approaches to LUTs and calibration, just wouldn’t be efficient. This is where ColorBox was instrumental. Combined with Assimilate Live Looks, it gave us a centralized way to unify and color grade footage from different camera types with low latency. We were able to swap the look and feel between shots and make every camera source ultimately look the same. It allowed us to deploy multiple LUTs on multiple ColorBoxes, test them, and bring them together seamlessly for a cohesive program output; the device is super flexible.
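One common way to think about matching different cameras is to give each feed its own transform: a camera-specific correction followed by a shared show look. The sketch below illustrates that idea with simple per-channel gains. It is an assumption-laden toy, not Immersive Design Studios' actual pipeline; the camera names, gain values, and helper functions (`gain`, `compose`, `transform_for`, `simulate_camera`) are all hypothetical.

```python
from typing import Callable, Dict, Tuple

RGB = Tuple[float, float, float]
Transform = Callable[[RGB], RGB]


def gain(r: float, g: float, b: float) -> Transform:
    """Return a transform that scales each channel by a fixed gain."""
    def apply(color: RGB) -> RGB:
        return (color[0] * r, color[1] * g, color[2] * b)
    return apply


def compose(first: Transform, second: Transform) -> Transform:
    """Return a transform that applies `first`, then `second`."""
    return lambda color: second(first(color))


# Hypothetical color casts: how each camera renders a neutral scene.
CAMERA_GAINS: Dict[str, RGB] = {
    "cam_a": (1.10, 1.00, 0.90),  # slightly warm
    "cam_b": (0.95, 1.00, 1.05),  # slightly cool
}

# Hypothetical shared look chosen for the show.
show_look = gain(1.00, 0.97, 0.90)


def transform_for(camera: str) -> Transform:
    """Undo the camera's own cast, then apply the shared show look."""
    r, g, b = CAMERA_GAINS[camera]
    normalise = gain(1.0 / r, 1.0 / g, 1.0 / b)
    return compose(normalise, show_look)


def simulate_camera(camera: str, scene: RGB) -> RGB:
    """Model what the camera outputs for a given scene color."""
    return gain(*CAMERA_GAINS[camera])(scene)


scene = (0.4, 0.5, 0.6)
graded = {cam: transform_for(cam)(simulate_camera(cam, scene))
          for cam in CAMERA_GAINS}
print(graded)  # both cameras should land on (approximately) the same color
```

In a real deployment each combined transform would typically be baked into its own LUT and loaded onto the unit handling that camera's feed, so every source arrives at the switcher already matched.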

Was this your first time using AJA gear?

No, we’ve used AJA gear for years, from the KONA I/O cards to FS frame synchronizers, Mini-Converters, and more. We’ve even started using BRIDGE NDI 3G. It’s a symbiotic fit, given CANVAS can ingest hundreds of NDI streams; most of our clients are using it as a set-and-forget solution for SDI to NDI encoding/decoding needs. We trust AJA as a brand, so we implement it when we need trustworthy conversion. ColorBox is reliable and does what it is supposed to without introducing any unnecessary complexities. That's the magic of AJA; the products are always lean, very focused, and withstand the test of time.

We choose AJA in synergy with the vision of the CANVAS platform technology: reliably enable frictionless execution that maximizes artistic decisions and the audience experience.

About AJA ColorBox

AJA ColorBox is a powerful video processing device designed to perform LUT-based color transformations and offers advanced-level color science with AJA Color Pipeline, as well as several look management approaches, including Colorfront, ORION-CONVERT, BBC, and NBCU LUTs. Featuring 12G-SDI in/out and HDMI 2.0 out, AJA ColorBox is capable of up to 4K/UltraHD 60p 10-bit YCbCr 4:2:2 and 30p 12-bit RGB 4:4:4 output, perfect for live production, on-set production, and post-production work. ColorBox's browser-based user interface makes it simple to adjust color processing settings, whether connecting directly via ethernet or via a third-party WiFi adapter.

About AJA BRIDGE NDI 3G

BRIDGE NDI 3G is a 1RU gateway offering high density conversion from 3G-SDI to NDI, and NDI to 3G-SDI for both multi-channel HD and 4K/UltraHD. Designed to drop into any existing NDI or SDI workflow as a plug and play appliance, BRIDGE NDI 3G brings immense conversion power and flexibility, fully controllable remotely for AV use, security and surveillance, broadcast, eSports, entertainment venues, and a wide range of other facilities needing high quality, efficient NDI encode and decode.

About AJA Video Systems

Since 1993, AJA Video Systems has been a leading manufacturer of video interface technologies, converters, digital video recording solutions and professional cameras, bringing high quality, cost effective products to the professional broadcast, video and post production markets. AJA products are designed and manufactured at our facilities in Grass Valley, California, and sold through an extensive sales channel of resellers and systems integrators around the world. For further information, please see our website at www.aja.com.

All trademarks and copyrights are property of their respective owners.

Media Contact:

Katie Weinberg

Raz Public Relations, LLC

310-450-1482, aja@razpr.com
